Context

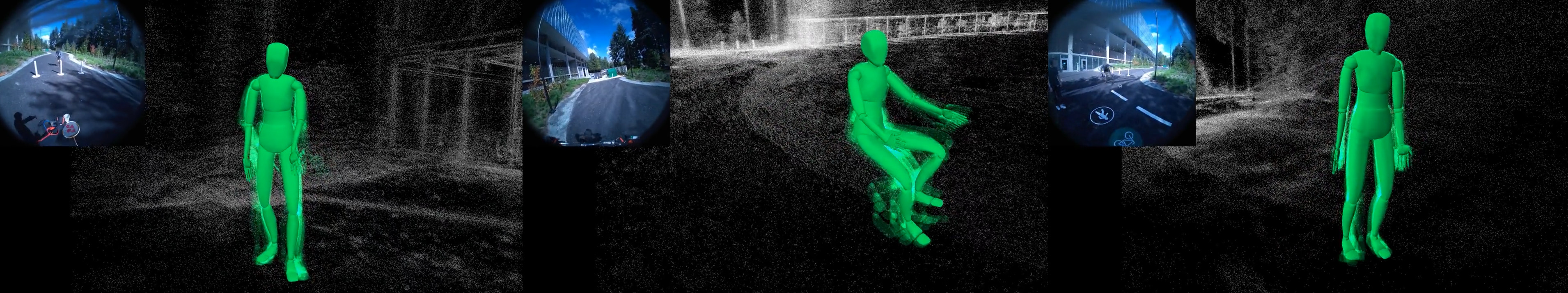

This is the 2nd edition of the EgoMotion Workshop on in-the-wild human motion modeling using egocentric multi-modal data from wearable devices. We focus on motion tracking, synthesis, and understanding, research topics that have garnered increasing interest and momentum thanks to recent advances in wearable egocentric sensors. Specific topics include:

- Body tracking, synthesis, and activity understanding from egocentric/exocentric cameras

- Body tracking, synthesis, and activity understanding from non-visual wearable sensors, e.g., inertial measurement units (IMUs), electromagnetic (EM) sensors, ultra-wideband (UWB) sensors, radar, and audio.

- Applications of egocentric motion for character animation, simulation, robotic learning, etc.

In addition to algorithms, the workshop aims to promote recent open-source projects to encourage and accelerate research in this field. To this end, we invite researchers to present recent large-scale motion datasets and associated challenges, such as GORP, Nymeria, EgoLife, HP-EPIC, EgoBody, etc. The workshop will also discuss state-of-the-art human motion modeling libraries such as Meta Momentum, present research hardware platforms such as Project Aria, and organize live demos.

Invited Speakers

Schedule

| Time | Event | Speaker | Title |
|---|---|---|---|
| 13:00 - 13:30 | Opening Talk | Richard Newcombe | |
| 13:30 - 14:05 | Keynote | Ziwei Liu, Ziqi Huang | From Egocentric Perception to Embodied Intelligence: Building the World in First Person |
| 14:05 - 14:40 | Keynote | Angjoo Kanazawa | Eye, Robot: Learning to Look to Act with a BC-RL Perception-Action Loop |
| 14:40 - 15:00 | Break | | |
| 15:00 - 15:35 | Keynote | Danfei Xu | Human Experience as a Foundation for Robot learning |
| 15:35 - 15:48 | Tech Talk | Lingni Ma | Nymeria++: Upgrade to the Nymeria Dataset with Optimized Motion, 3D Objects and More |
| 15:48 - 16:01 | Tech Talk | Ruihan Yang | EgoVLA: Learning Vision-Language-Action Models from Egocentric Human Videos |
| 16:01 - 16:14 | Tech Talk | Jian Wang | Egocentric Motion Capture and Understanding from Multi-Modal Inputs |
| 16:14 - 16:27 | Tech Talk | Boxiao Pan | Real-World Humanoid Egocentric Navigation |
| 16:27 - 16:40 | Tech Talk | Gen Li | Towards Egocentric Multimodal Multitask Pretraining |
| 16:40 - 16:53 | Tech Talk | Robin Kips | Egocentric Motion Synthesis and GORP dataset |
| 16:55 - 17:00 | Closing | | |